What are the files Robots.txt and SiteMapRSS.xml?

This document explains what Robots.txt and SiteMapRSS.xml are, and how they pertain to your XSite.

Document 7035 | Last updated: 02/15/2017 MJY

The SiteMapRSS.xml and Robots.txt files help search engines index a website. The Robots.txt file lists the pages that search engines should not index, while the SiteMapRSS.xml file lists all the pages that you do want search engines to index.
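As a rough illustration of what the two files hold, the Python sketch below reads a sample of each using only the standard library. The file contents, the `www.example.com` URLs, and the helper names `is_disallowed` and `sitemap_urls` are assumptions made for this example. The sample assumes the common sitemaps.org `<urlset>` layout, and the files your XSite generates may be formatted differently.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Hypothetical file contents, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /OldPage
"""

SITEMAP_XML = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>http://www.example.com/Home</loc></url>
  <url><loc>http://www.example.com/Services</loc></url>
</urlset>
"""

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def is_disallowed(robots_txt, url):
    """Return True if the robots.txt text asks all crawlers to skip the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch("*", url)


def sitemap_urls(sitemap_xml):
    """Return the URLs found in the <loc> elements of a sitemap."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.iter(f"{{{SITEMAP_NS}}}loc")]


print(is_disallowed(ROBOTS_TXT, "http://www.example.com/OldPage"))  # True
print(is_disallowed(ROBOTS_TXT, "http://www.example.com/Home"))     # False
print(sitemap_urls(SITEMAP_XML))
```

In this sample, `/OldPage` plays the role of a disabled page and appears only in Robots.txt, while the two enabled pages appear only in the site map.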

In the My Content section of your XSite Admin, when you check the box to enable a page on your XSite, that page is removed from the Robots.txt file and added to the SiteMapRSS.xml file. The opposite happens when you uncheck a page to disable it: the page's URL is removed from the SiteMapRSS.xml file and added to the Robots.txt file.

Typically, you don't have to do anything with these files; they are updated automatically any time you make a change to one of your pages. However, there are a couple of circumstances in which you may need to step in:

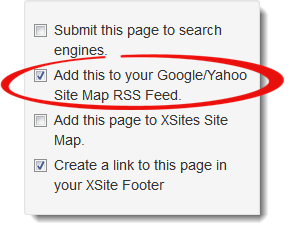

- If Google’s Webmaster Tools reports that “SiteMapRSS.xml contains pages that are blocked by Robots.txt,” disable the “Add this to your Google/Yahoo Site Map RSS Feed” option for the page that Robots.txt is blocking.

- If Google’s Webmaster Tools reports that SiteMapRSS.xml or Robots.txt is blank, check the box for a page in My Content, then uncheck it again, to force the files to regenerate.
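If you would like to check for either condition yourself before Google reports it, the Python sketch below shows one possible approach using only the standard library. It assumes you have already saved the text of both files. The function names `is_blank`, `listed_urls`, and `blocked_sitemap_urls` are hypothetical, as are the sample contents. Because the exact layout of SiteMapRSS.xml is not described here, the sketch collects URLs from both `<loc>` elements (sitemap protocol) and `<link>` elements (RSS).

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser


def is_blank(file_text):
    """Return True if a file has no content other than whitespace."""
    return not file_text.strip()


def listed_urls(sitemap_xml):
    """Collect URLs from <loc> or <link> elements, ignoring XML namespaces."""
    if is_blank(sitemap_xml):
        return []
    urls = []
    for element in ET.fromstring(sitemap_xml).iter():
        local_name = element.tag.rsplit("}", 1)[-1]
        if local_name in ("loc", "link") and element.text:
            urls.append(element.text.strip())
    return urls


def blocked_sitemap_urls(robots_txt, sitemap_xml, user_agent="*"):
    """Return the site map URLs that the robots.txt rules disallow."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        url for url in listed_urls(sitemap_xml)
        if not parser.can_fetch(user_agent, url)
    ]


# Hypothetical contents: /Blog is listed in the site map but also disallowed.
robots_txt = "User-agent: *\nDisallow: /Blog\n"
sitemap_xml = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>http://www.example.com/Home</loc></url>"
    "<url><loc>http://www.example.com/Blog</loc></url>"
    "</urlset>"
)

print(blocked_sitemap_urls(robots_txt, sitemap_xml))
print(is_blank(robots_txt), is_blank(sitemap_xml))
```

Any URL this returns corresponds to a page to review under the first bullet above, and a `True` from `is_blank` corresponds to the second.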